We are in a bind.

In recent years, the rapid advancement of high-powered computing has opened a world of productivity gains. Workers who use generative AI could see their performance improve by 40% compared to those who don’t use these tools. AI also has tremendous potential to unlock solutions for the world's toughest problems, namely climate change and health inequity. We are seeing novel climate prediction models, air pollution trackers, and rare earth materials thanks to AI.

However, there are real and tangible climate costs to this compute. In 2022, data centers, cryptocurrencies, and AI consumed about 460 TWh of electricity, nearly 2% of global electricity demand. Just a few weeks ago, a DoE official stressed how important additional energy sources on the grid are to meeting AI demand. By 2026, the IEA estimates that this consumption could more than double to 1,050 TWh. This could be 8% of total energy use globally.

Reasons to be hopeful: AI mitigating climate change

AI technologies are uniquely positioned to enhance our responses to climate change. In a recent report, BCG estimated that AI could mitigate 5-10% of global greenhouse gas emissions by 2030, equivalent to the total annual emissions of the European Union. This potential is largely due to AI’s ability to optimize energy use, improve resource management, and enhance the efficiency of industrial operations.

In a nod to VCs, this GreenFin article also highlighted how AI will support investment in climate solutions by helping investors identify companies, startups, and comparables.

Reasons to be doubtful: Resource intensity in compute

The deployment of AI itself comes with significant environmental costs. According to the OECD, large machine learning models are projected to use over 85.4 terawatt-hours of electricity each year by 2027, surpassing the total electricity usage of Portugal.

Electricity consumption for computing is not trivial. For example:

In 2022, data centers, cryptocurrencies, and AI consumed approximately 460 TWh of electricity, nearly 2% of global electricity demand.

This consumption breaks down as follows: computing (40%), cooling (40%), and other IT equipment (20%). Many tech companies don’t publish how these shares look in practice, but Google has disclosed that machine learning accounts for 10-15% of data center energy usage.
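To make the shares above concrete, here is a minimal Python sketch that splits the 460 TWh figure by the 40/40/20 breakdown and derives the power usage effectiveness (PUE) those shares would imply. The function names are hypothetical, and treating both "computing" and "other IT equipment" as IT load is an assumption of this sketch, not something the sources state.

```python
# Shares of data center electricity quoted in the text (fractions of the total).
SHARES = {"computing": 0.40, "cooling": 0.40, "other_it": 0.20}


def split_electricity(total_twh, shares=SHARES):
    """Split a total electricity figure (TWh) according to the given shares."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    return {name: total_twh * share for name, share in shares.items()}


def implied_pue(shares=SHARES, it_keys=("computing", "other_it")):
    """PUE = total facility energy / IT equipment energy.

    Assumption: 'computing' and 'other_it' both count as IT load,
    and everything else (here, cooling) is overhead.
    """
    it_share = sum(shares[key] for key in it_keys)
    return 1.0 / it_share


breakdown = split_electricity(460.0)  # 2022 total quoted in the text
for name, twh in breakdown.items():
    print(f"{name}: {twh:.0f} TWh")
print(f"implied PUE: {implied_pue():.2f}")
```

Under these assumptions, cooling alone would account for roughly as much electricity as the computing itself, which is why cooling efficiency matters so much for the overall footprint.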

Some of the best papers on the topic are written by Patterson’s research team (see here or here). They show the relative reduction in CO2e emissions from applying best practices in (1) model (efficient ML model architectures), (2) machine (using more recent GPUs vs. general-purpose processors), (3) mechanization (computing in the cloud vs. on premises), and (4) map (cloud computing means practitioners can pick ‘clean’ locations with renewable energy).

The figure below shows that Google was able to reduce energy consumption by 83x and CO2e by 747x over four years while maintaining a steady quality bar.

Data center energy sources vary greatly. For example, many centers in Western Europe and the northern United States operate on close to 100% renewable energy, while data centers in the ASEAN region are more mixed.

As a result, emissions can vary greatly from model to model. Of course, not all of the differences below are attributable to energy usage, but GLaM stands out with an 11x relative difference in CO2e and 2.8x greater energy efficiency. GLaM was trained in the Oklahoma data center, where tCO2e per MWh was 5x lower than where GPT-3 was trained.
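The effect of location can be sketched with a simple estimate: operational emissions are roughly the energy drawn by the hardware, scaled up by data center overhead (PUE), multiplied by the carbon intensity of the local grid. The Python sketch below uses that simplification; the function name and all input values are hypothetical illustrations, not figures from the papers cited above.

```python
def training_emissions_tco2e(it_energy_mwh, pue, grid_tco2e_per_mwh):
    """Estimate operational training emissions in tonnes of CO2e.

    it_energy_mwh:       energy drawn by the IT hardware (MWh)
    pue:                 power usage effectiveness of the facility (>= 1.0)
    grid_tco2e_per_mwh:  carbon intensity of the electricity supply
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_mwh * pue * grid_tco2e_per_mwh


# Hypothetical training run: same model, same hardware, two locations.
IT_ENERGY_MWH = 1_000.0
PUE = 1.1

# Hypothetical grid intensities; site_b is 5x cleaner than site_a.
site_a = training_emissions_tco2e(IT_ENERGY_MWH, PUE, grid_tco2e_per_mwh=0.40)
site_b = training_emissions_tco2e(IT_ENERGY_MWH, PUE, grid_tco2e_per_mwh=0.08)

print(f"site A: {site_a:.0f} tCO2e")
print(f"site B: {site_b:.0f} tCO2e")
print(f"ratio:  {site_a / site_b:.1f}x")
```

With everything else held constant, the emissions ratio between two sites equals the ratio of their grid intensities, so picking a cleaner location compounds with any gains from more efficient models and hardware.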

Climate impacts range beyond electricity usage. AI models also demand significant water resources for cooling.

The Scope-1 view on water consumption: onsite water consumption for server cooling is notably high. This includes water evaporated in cooling towers and other cooling methods essential to handle the heat from the high power densities of AI servers.

The Scope-2 view on water consumption: offsite water consumption, necessary for generating the electricity that powers AI operations, substantially increases AI's water footprint. This category includes water used at thermal power plants and in hydroelectric processes.
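The two scopes above can be combined into a rough operational water estimate. The sketch below assumes a common simplification: onsite water scales with IT energy through an onsite water usage effectiveness (WUE, litres per kWh), and offsite water scales with total facility energy (IT energy times PUE) through a water intensity factor for electricity generation. All names and input values are hypothetical.

```python
def scope1_water_litres(it_energy_kwh, onsite_wue_l_per_kwh):
    """Onsite (scope-1) water: cooling water consumed per kWh of IT energy."""
    return it_energy_kwh * onsite_wue_l_per_kwh


def scope2_water_litres(it_energy_kwh, pue, grid_water_l_per_kwh):
    """Offsite (scope-2) water: water consumed generating the facility's electricity."""
    return it_energy_kwh * pue * grid_water_l_per_kwh


# Hypothetical inputs for illustration only.
IT_ENERGY_KWH = 1_000_000.0   # 1 GWh of IT energy
ONSITE_WUE = 0.5              # litres per kWh, assumed
PUE = 1.2                     # assumed facility overhead
GRID_WATER = 3.0              # litres per kWh generated, assumed

scope1 = scope1_water_litres(IT_ENERGY_KWH, ONSITE_WUE)
scope2 = scope2_water_litres(IT_ENERGY_KWH, PUE, GRID_WATER)
total_m3 = (scope1 + scope2) / 1_000.0  # 1 cubic metre = 1,000 litres

print(f"scope 1: {scope1:,.0f} L")
print(f"scope 2: {scope2:,.0f} L")
print(f"total:   {total_m3:,.0f} cubic metres")
```

In this illustration the offsite share dominates, which matches the point above that electricity generation substantially increases AI's water footprint; the actual split depends heavily on the cooling design and the local generation mix.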

Taking a step back, resource usage will only grow as the need for compute increases. Projections for 2027 indicate that:

AI may need between 4.2 and 6.6 billion cubic meters of water, equivalent to the annual water withdrawal of multiple countries.

The combined scope-1 and scope-2 water consumption is expected to reach 0.38 to 0.60 billion cubic meters annually.

Innovations in Green Compute

To ensure AI ‘does no significant harm’ (borrowing from the SFDR framework), incumbents and innovators need to find ways to reduce the energy and water intensity of data centers and other tangential AI products. They could adopt energy-efficient algorithms and prioritize the development of AI systems that reduce carbon emissions and advance climate mitigation, adaptation, and resilience efforts.

One example is Carburacy, a metric developed by G. Moro, Luca Ragazzi, and Lorenzo Valgimigli and presented at the AAAI Conference in 2023. Carburacy measures both the effectiveness and the environmental impact of AI models. This dual metric encourages the development of AI systems that are not only high-performing but also low in carbon emissions, offering a balanced approach to technological advancement and environmental responsibility.

Carburacy score

Carburacy score (C = 0); the dashed lines are the derivatives. B shows different behaviors by varying β and R (α = 10). C reports the relation between ∆C, the difference of carbon emissions, and the effectiveness of keeping Carburacy stable using different α values, starting from a model with R = 0.4 and Υ · β = 0.5.

Other innovations include:

Cooling. Innovations such as high-efficiency cooling systems and machine learning optimizations for cooling and server operations are being developed to curb energy consumption. Vigilant was one of the first players in this space, backed by Accel in 2012.

Quantum & photonic computing. Quantum computing and hyperscale data centers are seen as long-term solutions for reducing energy use while managing larger data loads.

Model optimisation. Increasingly efficient underlying AI models and LLMs, as Sam Altman spoke about here. This primer from DataTonic is a great deep dive into how fine-tuning, cold-start, and inference each impact overall model emission costs.

Chip design. Better chip design with a focus on sustainably mined materials and increased efficiency at the component level.

Incumbents are also making strides in innovating on green compute. For example:

Microsoft is exploring submerged liquid cooling technology to boost energy efficiency in its data centers.

Amazon implements edge computing to shorten data transmission distances, cutting down on energy consumption and latency.

Google invests heavily in renewable energy projects like wind and solar farms to power its data centers with clean energy.

IBM employs artificial intelligence to streamline data center operations, resulting in energy savings and enhanced performance.

Finnish energy company Fortum recycles waste heat from data centers to heat homes and buildings in Helsinki, showcasing a sustainable approach to energy usage.

Governments are taking note of the carbon cost of compute

Decarbonization efforts are intensifying across the globe, with several regions implementing targeted strategies to reduce the environmental impact of their digital infrastructures.

In Europe, for example, the Climate Neutral Data Centre Pact is setting ambitious goals for the continent's data centers to achieve climate neutrality by 2030. This initiative is part of a broader commitment to sustainability, mandating strict operational standards that promote the use of renewable energy sources and enhance overall energy efficiency. The European Green Deal also sets bold policies to reach climate neutrality by 2050, some of which revolve around energy usage in data centers.

Simultaneously, in the United States and China, significant regulatory steps are being taken to ensure that data centers not only operate more efficiently but also increasingly rely on renewable energy sources. The US offers Renewable Energy Tax Credits, incentives that encourage companies to power their data centers with renewable energy.

There are also agreements such as the Dutch Data Center Association Green Deal, which links government and data center operators to directly encourage energy efficiency.

These regulations are designed to create a sustainable technological ecosystem, integrating environmental considerations directly into the core operational strategies of data center management.

Example: Microsoft's Sustainability Challenge with AI

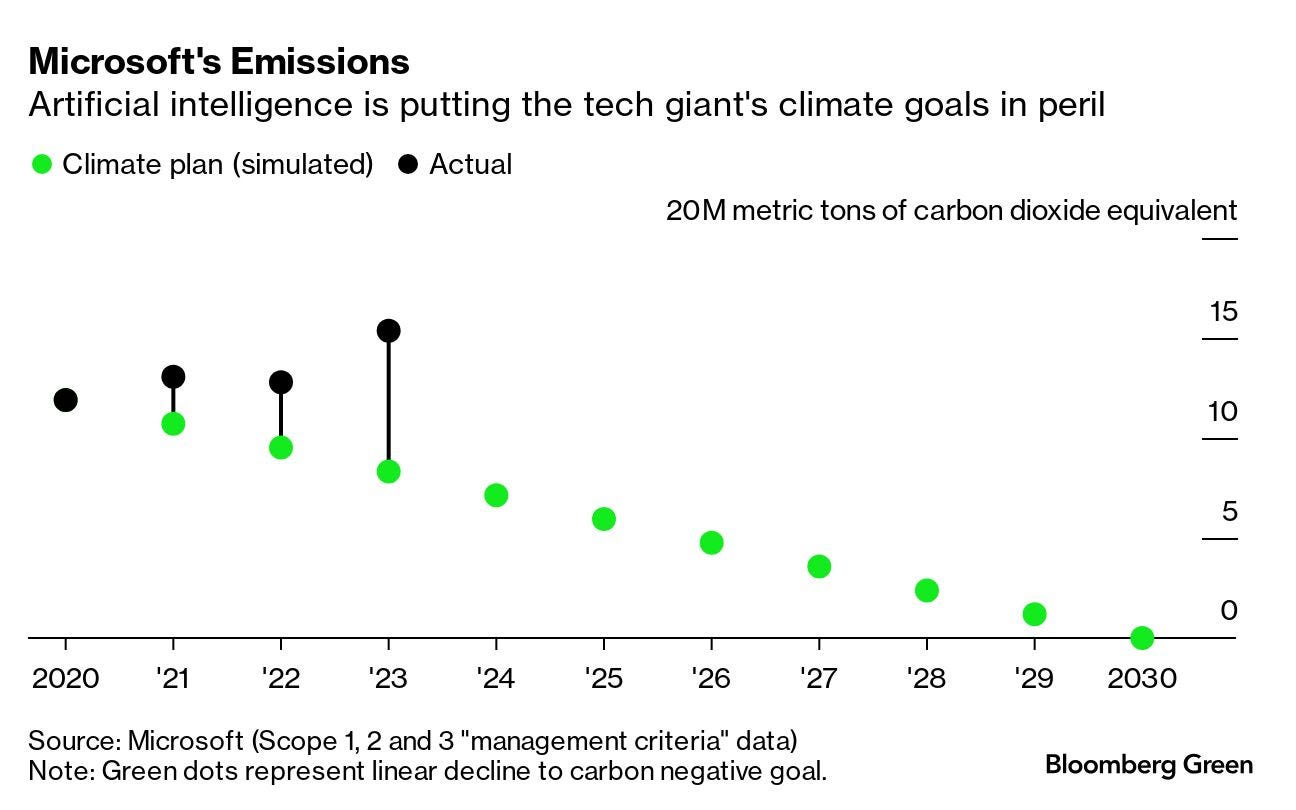

In 2020, Microsoft set one of the tech industry's most ambitious climate goals: to become carbon negative by the end of the decade. However, the company's drive to lead in the burgeoning field of AI is now complicating these plans. The growth in AI has significantly increased Microsoft's carbon footprint, making the journey to carbon negativity even more arduous than anticipated.

As of 2024, Microsoft reports a 30% increase in its environmental impact compared to four years earlier. This rise is largely due to the exponential demand for AI technologies, which not only increases the load on existing data centers but also necessitates the construction of new facilities. These new facilities rely heavily on carbon-intensive materials such as steel and concrete, further exacerbating the challenge.

Microsoft is now forced to accelerate its strategies for adopting greener technologies and materials, such as less carbon-intensive chips and green construction materials, to meet its sustainability targets. The company is also actively pursuing large-scale carbon dioxide removal (CDR) strategies. In fiscal year 2023, Microsoft contracted over 5 million tonnes of carbon removal, more than 3 times the amount of the previous year. Notably, their partnership with Heirloom to purchase up to 315,000 metric tons of CO2 removal represents one of the largest deals of its kind to date.

The case of Microsoft illustrates the dual challenge tech companies face in balancing ambitious AI developments with their environmental responsibilities. This scenario is not unique to Microsoft but is indicative of a larger trend among tech giants striving to reconcile rapid technological innovation with sustainable practices.

Embracing the AI shift, with the necessary climate safeguards

There is no doubt that AI is the next technological shift in our society.

The carbon cost of compute needs to be factored into this shift, and mitigated accordingly. Gartner talks about this as the "Two Concepts of Sustainable AI": the need to manage AI’s own environmental footprint while maximizing its capabilities to drive global sustainability efforts.

As we continue to explore these concerns, the journey toward sustainable AI promises not only technological innovation but also a pathway to a world of sustainable consumption.

Authors

This article was co-authored by Estia Ryan, Principal & Head of Research at Eka Ventures, and Arthur Bessieres, Ventures Lead for France at Plug and Play Tech Center.